Kosmos-2 could be revolutionary for Embodiment AI.

- New research funded by Microsoft delves into AI physicality.

- The language model, Kosmos-2, was trained to perceive spatial concepts.

- It can also link words in its answers to specific regions of an image.

Microsoft has been investing heavily in AI research lately. Orca 13B, built by a team of researchers assembled and funded by Microsoft, is open source to the public.

LongMem is Microsoft’s hope for unlimited context length in AI models, and it is also a product of research funded by the Redmond-based tech giant.

Phi-1, a new language model for coding, is capable of learning and developing knowledge on its own. Microsoft funded the research for it.

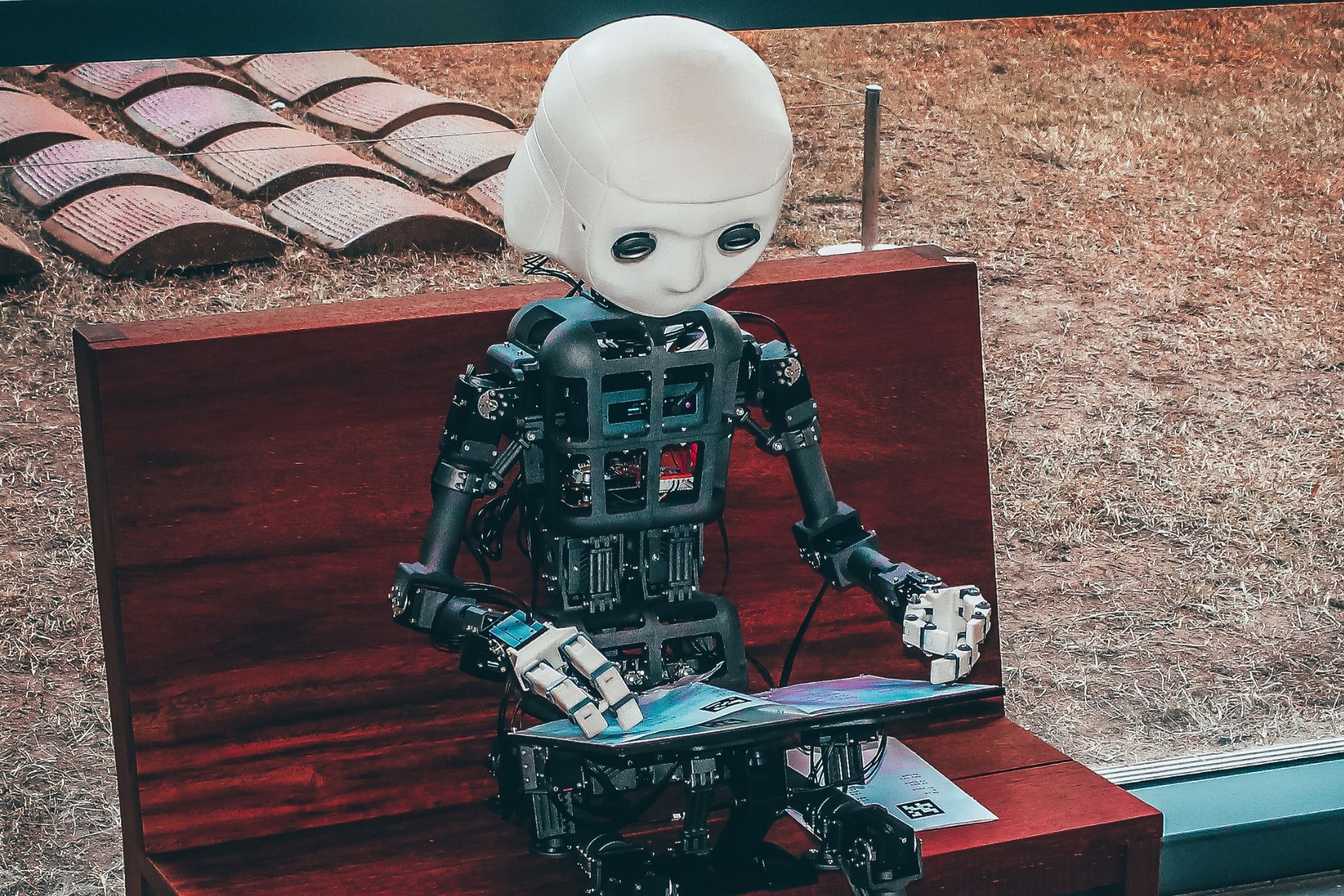

Embodiment AI seems to be the next quest in AI development, and Microsoft might just have the answer with yet another research project. This time it’s Kosmos-2, a new AI model that lays the foundation for Embodiment AI.

Microsoft’s Kosmos-2 is the Embodiment AI prototype

Maybe this is the first time you’ve heard of Embodiment AI. The name is pretty suggestive in itself, but what exactly is Embodiment AI?

Embodiment AI is a field of artificial intelligence that focuses on the development of intelligent agents that have a physical body and can interact with the world in a meaningful way.

The concept is based on the idea that the physical body plays a significant role in how an agent learns and makes decisions.

In other words, if an AI had a body and could move, it could learn from that experience and use it to form answers and interact with its surroundings. And if you think we are entering science-fiction territory, hold your ground: AI was always supposed to become physical.

According to the research, Kosmos-2 is a multimodal large language model (MLLM) with new capabilities: perceiving object descriptions (e.g., bounding boxes) and grounding text to the visual world. The researchers represent referring expressions as links in Markdown, i.e., “[text span](bounding boxes)”, where the object descriptions are sequences of location tokens.
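To make the Markdown-link idea more concrete, here is a minimal sketch of how a bounding box could be turned into location tokens and attached to a text span. It assumes the image is divided into a 32 × 32 grid of bins, that a box is described by the bins of its top-left and bottom-right corners, and that tokens are spelled `<locN>`; the grid size, token names, and helper functions (`bin_index`, `location_tokens`, `ground`, `parse`) are hypothetical illustrations, not the actual Kosmos-2 tokenizer.

```python
import re
from dataclasses import dataclass

GRID = 32  # assumption: the image is split into GRID x GRID bins

@dataclass
class Box:
    """Bounding box in normalized coordinates, each value in [0, 1]."""
    x0: float
    y0: float
    x1: float
    y1: float

def bin_index(x: float, y: float, grid: int = GRID) -> int:
    """Map a normalized (x, y) point to the row-major index of its grid bin."""
    col = min(int(x * grid), grid - 1)  # clamp so x == 1.0 lands in the last column
    row = min(int(y * grid), grid - 1)  # clamp so y == 1.0 lands in the last row
    return row * grid + col

def location_tokens(box: Box, grid: int = GRID) -> str:
    """Encode a box as two hypothetical location tokens: top-left bin, then bottom-right bin."""
    top_left = bin_index(box.x0, box.y0, grid)
    bottom_right = bin_index(box.x1, box.y1, grid)
    return f"<loc{top_left}><loc{bottom_right}>"

def ground(text_span: str, box: Box, grid: int = GRID) -> str:
    """Render a referring expression as a Markdown-style link: [text span](location tokens)."""
    return f"[{text_span}]({location_tokens(box, grid)})"

# Matches [span](<locA><locB>) and captures the span plus both bin indices.
LINK = re.compile(r"\[([^\]]+)\]\(<loc(\d+)><loc(\d+)>\)")

def parse(text: str) -> list[tuple[str, int, int]]:
    """Recover (text span, top-left bin, bottom-right bin) triples from grounded text."""
    return [(m.group(1), int(m.group(2)), int(m.group(3))) for m in LINK.finditer(text)]

print(ground("a snowman", Box(0.1, 0.2, 0.5, 0.9)))  # [a snowman](<loc195><loc912>)
```

Encoding a box as discrete tokens lets the language model read and write locations the same way it handles words, which is the point the researchers make about grounding text to the visual world.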

Together with multimodal corpora, the researchers constructed a large-scale dataset of grounded image-text pairs (called GrIT) to train the model. In addition to the capabilities that existing MLLMs already have, Kosmos-2 integrates grounding into downstream applications.

This means the language model has taken a step toward perceiving space, bringing language closer to perception, action, and world modeling. The researchers believe this makes Kosmos-2 a foundation for physical AI. You can read the research here.

What do you think about Microsoft’s Kosmos-2? Would it be good if AI had a physical form? Let us know in the comments section below.
