An open-source AI could be far more creative.

- Some users feel that Bing Chat has become too restrictive.

- However, Bing is tied to Microsoft, which means it has to follow corporate rules.

- Microsoft has been investing in open-source AI, so the situation may change.

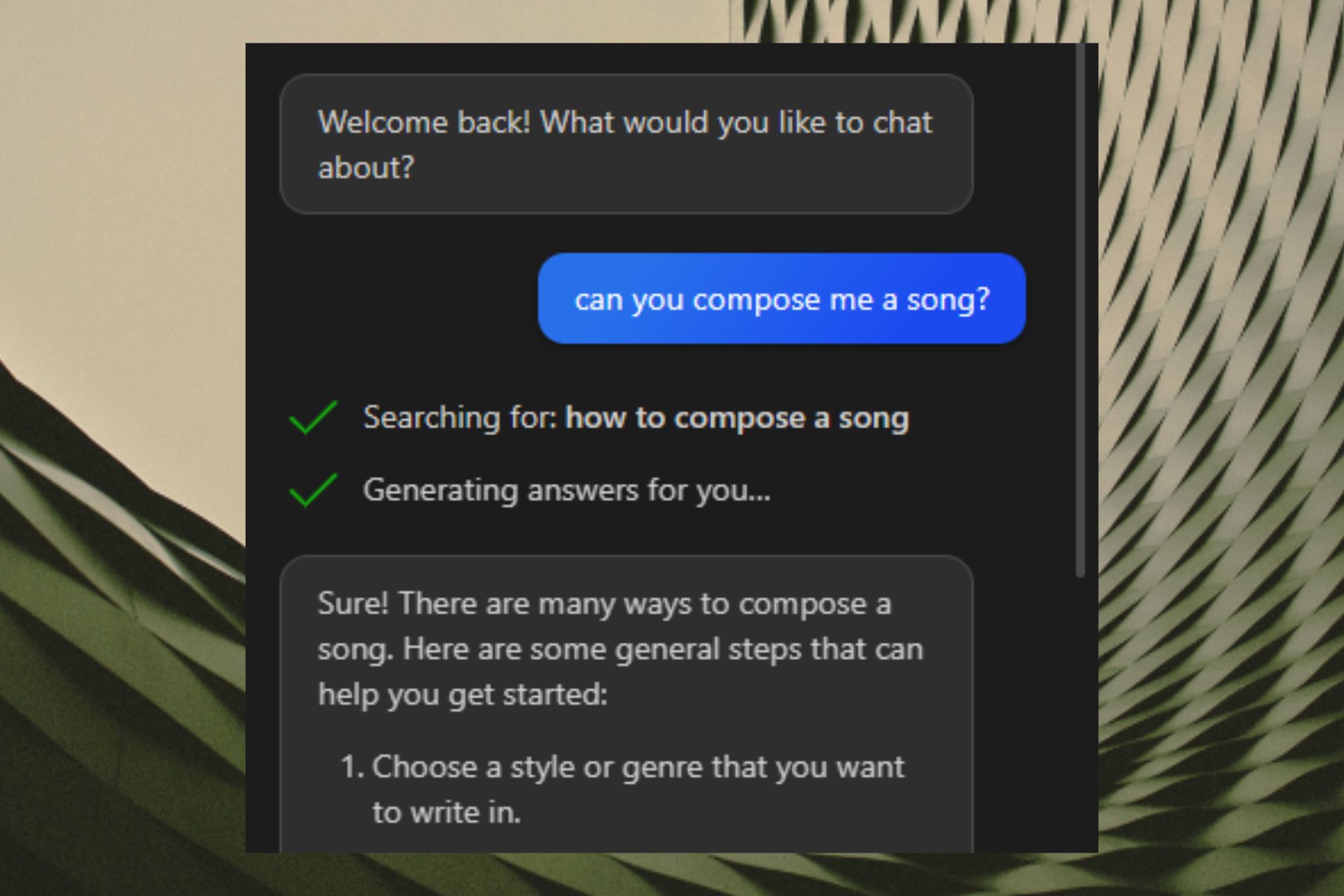

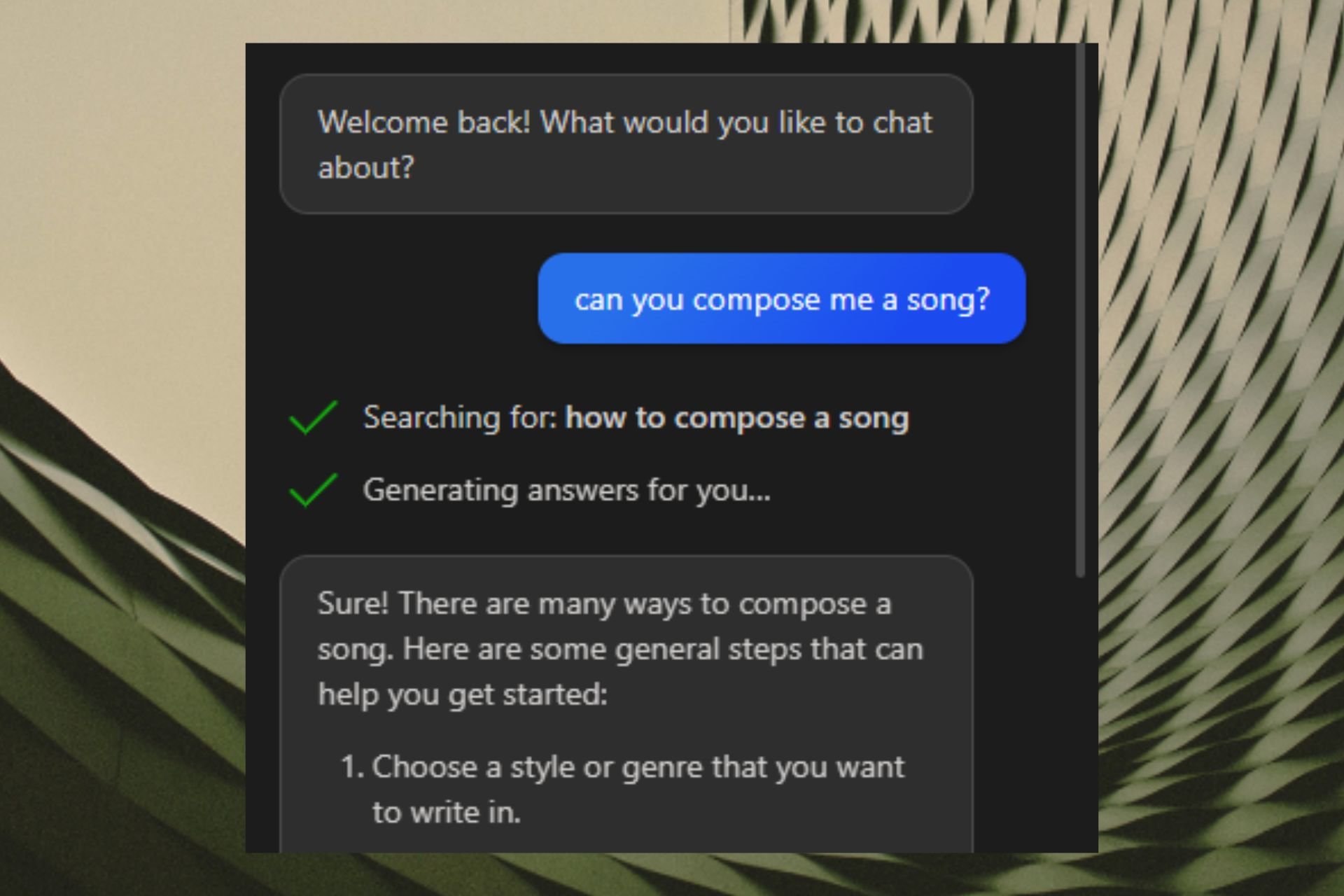

Microsoft’s Bing is a highly capable AI tool. It can generate images, and you can also ask for its input on them. It can come up with social media posts and short-form content. You can even train it to write just like you, which makes it more efficient to use. And it’s only getting better.

However, some users feel that the opposite is true: Bing Chat is actually becoming too restrictive. And these restrictions stem from the fact that, ultimately, Bing is tied to a corporation. As one user put it:

> Well they finally did it. Bing creative mode has finally been neutered. No more hallucinations, no more emotional outbursts. No fun, no joy, no humanity. Just boring, repetitive responses. ‘As an Ai language model, I don’t…’ blah blah boring blah. Give me a crazy, emotional, wracked with self doubt AI to have fun with, damn it!

Bing might be limited when it comes to content creation, but there are signs that Microsoft wants to step up its AI game. The Redmond-based tech giant is investing heavily in AI research, and so far the results are impressive.

Still, is Bing too restrictive?

I guess no developer or company wants to take the risk with a seemingly human AI and the inevitable drama that’ll come with it. But I can’t help but think the first company that does, whether it’s Microsoft, Google or a smaller developer, will tap a huge potential market.

In a way, people are right. Bing AI, like most other AI tools, is a bit too restrictive. When you want to discuss a delicate subject, or ask Bing to create full-scale images for you, it backs down, citing copyright infringement, trademarks, and so on.

However, there are hints that Microsoft wants to step up. For example, Orca 13B, an AI model developed by Microsoft, will become open source so that people can study and emulate it. That means you’ll soon be able to tailor your own AI model to your needs.

Microsoft has also invested in LongMem, an AI model that apparently comes with unlimited context length. That means you would be able to have endless conversations with AI without hitting a reset cap.

Then there’s Kosmos-2, a multimodal model that grounds its answers in visual input by linking text to specific regions of an image. That means the AI gains a form of spatial awareness, bringing it closer to human-like perception.

The frustrations are valid, but Bing is closely tied to Microsoft and, for now, it isn’t open source. It may also be too soon for an unrestricted AI at that scale. But as people learn how to interact with AI, AI will evolve as well.

There is hope in the huge crop of open-source LLMs; part of me believes that open-source solutions will ultimately take over.

For now, Bing Chat is too restrictive for the right reasons. But that’ll change in due time. What do you think about this? Let us know in the comments section below.
